Memory-Chip Boom Driven by AI Demand

The Memory-Chip Industry Enters a New Era of Growth

The memory-chip industry, known for its unpredictable fluctuations, is experiencing a prolonged period of growth driven by the rapid expansion of artificial intelligence (AI) technologies. Companies like Samsung Electronics, SK Hynix, and Micron Technology are seeing increased demand for their products, which are essential for both training and running AI models.

According to research firm TrendForce, revenue from DRAM chips, which are commonly used in computers and other devices, is expected to reach around $231 billion next year, a significant increase from the market's low point in 2023. This surge in demand has led to impressive financial results for major players in the sector.

Samsung reported a 21% increase in net profit for the July-September quarter, reaching approximately $8.6 billion. Its chip division also posted record quarterly revenue, and the division's operating profit rose nearly 80% to about $4.9 billion. Similarly, SK Hynix announced record earnings, with its third-quarter net profit more than doubling to around $8.8 billion. The company stated that the memory market has entered a “super boom cycle,” with its capacity for next year already fully booked.

Micron also saw strong performance, with its net profit tripling to $3.2 billion for its most recent quarter. These figures highlight the growing importance of memory chips in the global technology landscape.

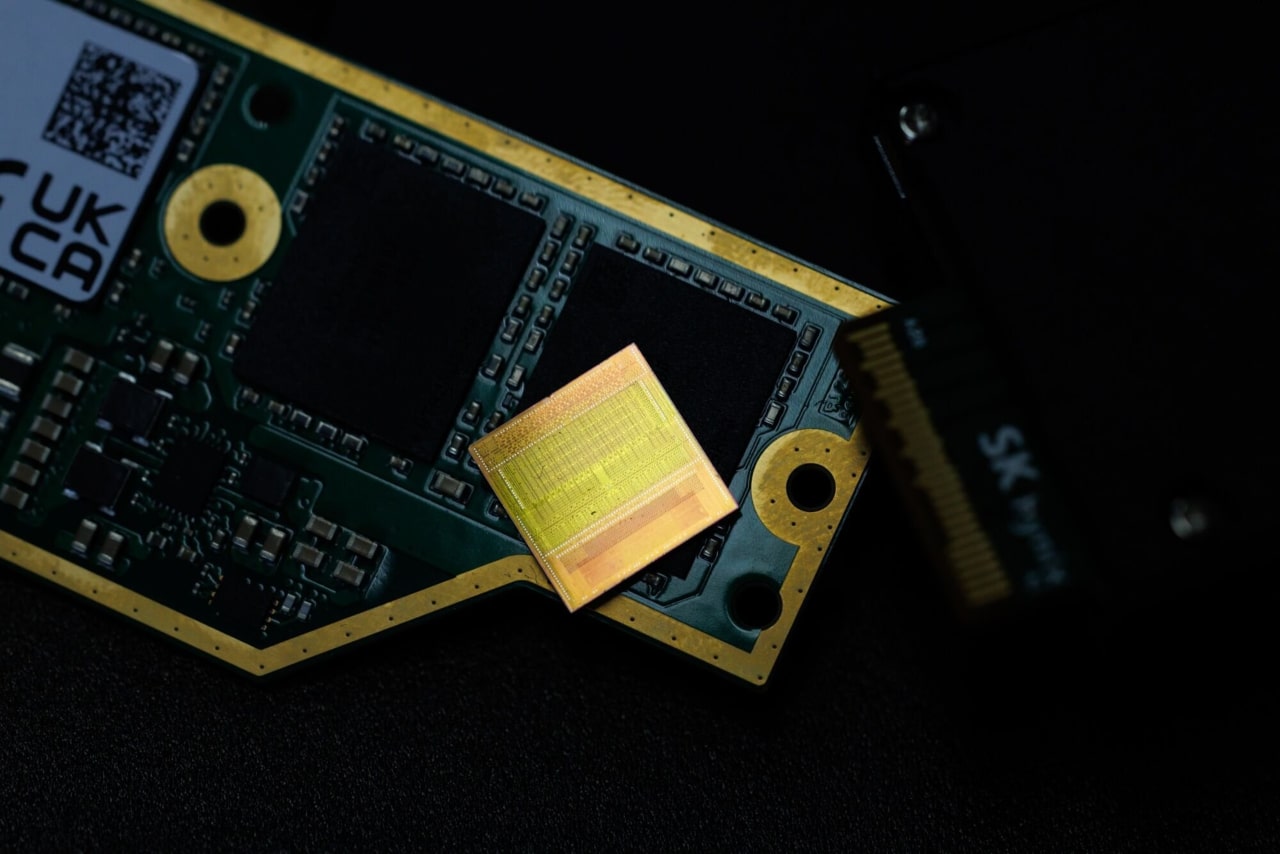

The Role of High-Bandwidth Memory in AI

As AI continues to evolve, specialized types of memory, such as high-bandwidth memory (HBM), have become increasingly important. HBM is designed for use in training AI models and allows larger amounts of data to be processed simultaneously when combined with GPUs. This type of memory is crucial for AI servers that handle complex computations during model training.

In October, OpenAI, the company behind ChatGPT, signed letters of intent with Samsung and SK Hynix to collaborate on the Stargate infrastructure project. OpenAI's demand is expected to reach up to 900,000 DRAM wafers per month, which exceeds the current HBM capacity. OpenAI CEO Sam Altman described the two companies as key contributors to global AI infrastructure.

While HBM is central to AI, traditional memory chips are also in high demand. Major U.S. data-center companies, including Amazon, Google, and Meta Platforms, are purchasing these chips for regular servers. However, supply remains tight because manufacturers have focused on expanding HBM production.

The Broader Implications for the Memory Market

Although HBM is closely associated with AI, conventional memory chips are still valuable for certain AI tasks, particularly AI inference. This process involves using a trained model to generate outputs, such as responses from a chatbot. For some tasks, like storing and retrieving large amounts of data from an AI model, traditional servers can be more cost-effective.

Peter Lee, a Seoul-based semiconductor analyst at Citi, noted that as AI models become more widely used, data centers are expanding to manage the increased workload. He emphasized that the memory market is shifting toward a new paradigm, with demand spreading across various product areas.

SK Hynix’s chief financial officer, Kim Woo-hyun, highlighted this transformation, stating that the demand for memory chips has begun to spread to all product areas. The HBM market alone is expected to grow by an average of more than 30% a year over the next five years, according to SK Hynix.

Challenges and Uncertainties

Despite the current boom, the memory-chip industry is known for its extreme boom-and-bust cycles. A recent downturn occurred just a few years ago when pandemic-era demand declined before the AI boom took off. In 2023, SK Hynix reported a net loss of over $6 billion.

TrendForce’s senior research vice president, Avril Wu, believes the current supply crunch will persist through 2026 and possibly into early 2027. However, there is some skepticism about whether the massive long-term infrastructure spending plans by companies like OpenAI will be fully realized.

Sanjeev Rana, a semiconductor analyst at CLSA, expressed concerns about the feasibility of OpenAI’s ambitious projections. He pointed out that the demand for memory could surpass current and planned capacities, raising questions about whether the company will meet its goals.

As the AI industry continues to expand, the memory-chip sector is poised for continued growth. However, the challenges of maintaining supply and meeting demand will remain critical issues for the industry in the coming years.
